[A Cloud Guru, Linux Academy] Implementing a Full CI/CD Pipeline [RUS, 2020]

08. Monitoring

I start a local Kubernetes cluster.

$ kubectl get nodes

NAME         STATUS   ROLES                  AGE   VERSION
master.k8s   Ready    control-plane,master   39h   v1.20.1
node1.k8s    Ready    <none>                 39h   v1.20.1
node2.k8s    Ready    <none>                 39h   v1.20.1

I install Helm 3 on localhost.

$ mkdir ~/kubernetes-configs && cd ~/kubernetes-configs
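The chart references used in these notes (stable/prometheus, stable/grafana) only resolve if a repository named stable is registered with Helm. A minimal sketch of that step, assuming the archived stable repository is still served at its public URL; the function name add_chart_repos is made up for illustration. The same charts are now maintained in the prometheus-community and grafana repositories, which are added here as an alternative, not as what these notes used.

```shell
# Register the chart repositories (add_chart_repos is a hypothetical helper name).
add_chart_repos() {
  # Archived home of the old "stable" charts referenced in these notes
  helm repo add stable https://charts.helm.sh/stable
  # Current homes of the same charts (alternative to stable/...)
  helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
  helm repo add grafana https://grafana.github.io/helm-charts
  # Refresh the local index so that "helm search repo" sees the charts
  helm repo update
}

# Usage: add_chart_repos && helm search repo prometheus
```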

01. Prometheus

$ vi prometheus-values.yml

alertmanager:
  persistentVolume:
    enabled: false
server:
  persistentVolume:
    enabled: false

$ helm search repo prometheus

$ kubectl create namespace prometheus

$ helm install prometheus \
    -f prometheus-values.yml \
    stable/prometheus \
    --namespace prometheus

$ kubectl get pods -n prometheus
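Instead of re-running kubectl get pods until everything is up, kubectl wait can block until the pods report Ready. A small sketch; wait_for_pods is a hypothetical helper name and the 300s timeout is an arbitrary choice.

```shell
# Block until every pod in the given namespace is Ready, or fail after the timeout.
# wait_for_pods and the 300s timeout are illustrative choices.
wait_for_pods() {
  kubectl wait --namespace "$1" --for=condition=Ready pods --all --timeout=300s
}

# Usage: wait_for_pods prometheus
```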

$ helm list -n prometheus

NAME        NAMESPACE   REVISION  UPDATED                                 STATUS    CHART               APP VERSION
prometheus  prometheus  1         2021-01-12 15:11:13.92312402 +0300 MSK  deployed  prometheus-11.12.1  2.20.1

02. Grafana

$ vi grafana-values.yml

adminPassword: password

$ kubectl create namespace grafana

$ helm install grafana \
    -f grafana-values.yml \
    stable/grafana \
    --namespace grafana
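The adminPassword value from grafana-values.yml ends up in a Kubernetes Secret, so it can be read back to confirm what the login for the admin user is. A sketch, assuming the chart's usual naming: a Secret called grafana (the release name) with the key admin-password. The function name is made up for illustration.

```shell
# Print the Grafana admin password stored by the chart.
# Assumes the Secret is named "grafana" and uses the key "admin-password".
grafana_admin_password() {
  kubectl get secret --namespace grafana grafana \
    -o jsonpath='{.data.admin-password}' | base64 --decode
}

# Usage: grafana_admin_password; echo
```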

$ export POD_NAME=$(kubectl get pods --namespace prometheus -l "app=prometheus,component=server" -o jsonpath="{.items[0].metadata.name}")

$ kubectl --namespace prometheus port-forward $POD_NAME 9090

http://localhost:9090/graph
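Besides the web UI, the forwarded port also exposes the Prometheus HTTP API, which is handy for a quick check from a second terminal while the port-forward is running. A minimal sketch; prom_query is a hypothetical helper name, and up is a built-in series that reports 1 for every target scraped successfully.

```shell
# Run an instant PromQL query against the port-forwarded Prometheus server.
# prom_query is an illustrative helper; it prints the raw JSON response.
prom_query() {
  curl -fsS -G 'http://localhost:9090/api/v1/query' --data-urlencode "query=$1"
}

# Usage (the port-forward must be running in another terminal):
#   prom_query 'up'
```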

$ kubectl port-forward --namespace grafana service/grafana 3000:80

http://localhost:3000/

Add data source - Prometheus

Name: Prometheus

URL: http://prometheus-server.prometheus.svc.cluster.local

Save and test
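The same data source can also be declared in the chart values, so that it exists right after installation without any clicks in the UI. A sketch, assuming the Grafana chart's datasources value (which is rendered into a provisioning file); the file name grafana-values-datasource.yml is made up here, and the helm upgrade line is left commented out because it needs the cluster and the chart repository.

```shell
# Write a values file that provisions the Prometheus data source.
# The file name is illustrative; the URL is the one entered in the UI above.
cat > grafana-values-datasource.yml <<'EOF'
adminPassword: password
datasources:
  datasources.yaml:
    apiVersion: 1
    datasources:
      - name: Prometheus
        type: prometheus
        access: proxy
        url: http://prometheus-server.prometheus.svc.cluster.local
        isDefault: true
EOF

# Apply it to the existing release:
# helm upgrade grafana stable/grafana -f grafana-values-datasource.yml --namespace grafana
```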

Cluster monitoring

http://grafana.com/dashboards/3131

Grafana -> + -> Import -> 3131

Application monitoring

Deploy the application the same way as last time:

https://github.com/linuxacademy/cicd-pipeline-train-schedule-monitoring

$ cat << 'EOF' | kubectl apply -f -

apiVersion: v1
kind: Service
metadata:
  name: train-schedule-service
  annotations:
    # picked up by the Prometheus chart's annotation-based service discovery
    prometheus.io/scrape: 'true'
spec:
  type: NodePort
  selector:
    app: train-schedule
  ports:
  - protocol: TCP
    port: 8080
    nodePort: 30002
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: train-schedule-deployment
  labels:
    app: train-schedule
spec:
  replicas: 2
  selector:
    matchLabels:
      app: train-schedule
  template:
    metadata:
      labels:
        app: train-schedule
    spec:
      containers:
      - name: train-schedule
        image: linuxacademycontent/train-schedule:1
        ports:
        - containerPort: 8080

EOF

http://node1.k8s:30002/

http://node1.k8s:30002/metrics

The metrics endpoint works!
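The same check can be scripted rather than eyeballed in the browser. A small sketch that looks for a given metric in the Prometheus text exposition format; has_metric is a hypothetical helper name, and http_request_duration_ms_count is the metric used in the dashboard query further down.

```shell
# Succeed if the exposition text on stdin contains at least one sample of metric $1.
# A sample line starts with the metric name followed by "{" (labels) or a space.
has_metric() {
  grep -Eq "^$1(\{| )"
}

# Usage:
#   curl -fsS http://node1.k8s:30002/metrics \
#     | has_metric http_request_duration_ms_count && echo "metric is exposed"
```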

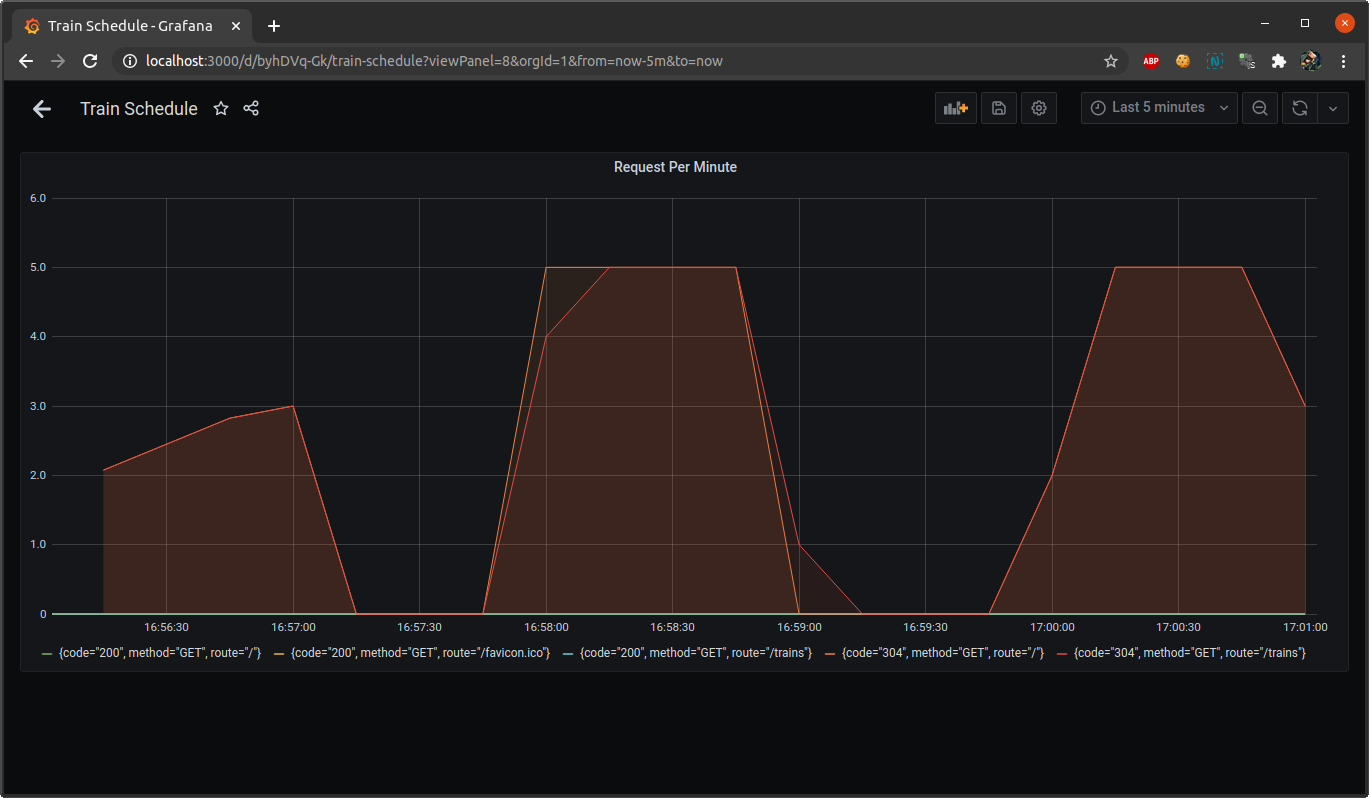

Grafana -> + -> Create -> Dashboard

sum(rate(http_request_duration_ms_count[2m])) by (service, route, method, code) * 60
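In the query above, the _count series counts observed requests, rate(...[2m]) turns the counter into a per-second increase over the last two minutes, and the factor 60 converts that into requests per minute. The arithmetic can be sketched with two made-up counter samples; this simplification ignores the extrapolation and counter-reset handling that Prometheus applies, and per_minute_rate is a hypothetical helper name.

```shell
# Approximate rate(counter[window]) * 60 from two samples of a counter.
# Arguments: first value, last value, seconds between the samples (all illustrative).
per_minute_rate() {
  LC_ALL=C awk -v first="$1" -v last="$2" -v secs="$3" \
    'BEGIN { printf "%.1f\n", (last - first) / secs * 60 }'
}

# 60 requests observed over 120 seconds: 0.5 per second, 30 per minute
per_minute_rate 100 160 120   # prints 30.0
```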

Alerts are configured on a separate tab.

Grafana alerting documentation:

http://docs.grafana.org/alerting/rules/

I delete the resources I created:

$ kubectl delete svc train-schedule-service

$ kubectl delete deployment train-schedule-deployment
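The two commands above remove only the application. If the monitoring stack is no longer needed either, the Helm releases and their namespaces can be removed as well. A sketch, assuming the release and namespace names used in these notes; remove_monitoring_stack is a hypothetical helper name.

```shell
# Remove the Grafana and Prometheus releases and the namespaces created for them.
# remove_monitoring_stack is an illustrative helper, not part of the course material.
remove_monitoring_stack() {
  helm uninstall grafana --namespace grafana
  helm uninstall prometheus --namespace prometheus
  kubectl delete namespace grafana prometheus
}

# Usage: remove_monitoring_stack
```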