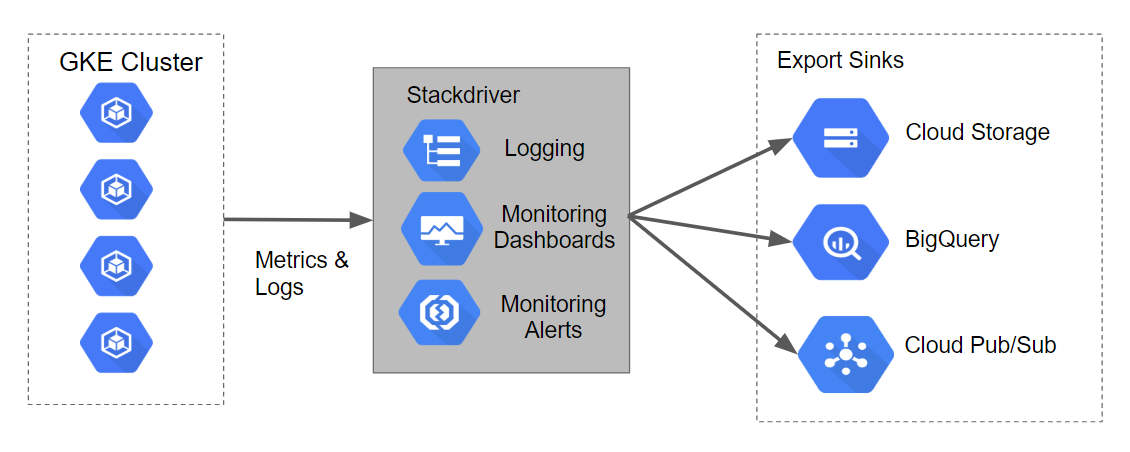

[GSP483] Logging with Stackdriver on Kubernetes Engine

Worked through on:

04.05.2019

https://www.qwiklabs.com/focuses/5539?parent=catalog

Install Terraform

$ git clone https://github.com/GoogleCloudPlatform/gke-logging-sinks-demo

$ cd gke-logging-sinks-demo

$ gcloud config set compute/region us-central1

$ gcloud config set compute/zone us-central1-a
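Cloud Shell normally has the required tools installed. If you run the lab from your own machine, it helps to confirm they are on the `PATH` before running `make create`. This is a minimal sketch. The tool list in the usage line (git, gcloud, terraform, kubectl, make) is my assumption about what the demo's scripts call and is not taken from the repo. The helper names are hypothetical.

```shell
# Check that every command named in the arguments is on the PATH.
# Returns 1 and names the first missing tool if any is absent.
require_tools() {
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing tool: $tool" >&2
      return 1
    fi
  done
}

# Print the region and zone set with `gcloud config set` above.
show_gcloud_location() {
  gcloud config get-value compute/region
  gcloud config get-value compute/zone
}
```

Usage: `require_tools git gcloud terraform kubectl make && show_gcloud_location`.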

Deployment

There are three Terraform files provided with this lab example. The first, main.tf, is the starting point for Terraform. It describes the features that will be used, the resources that will be manipulated, and the outputs that will result. The second, provider.tf, specifies which cloud provider and version the Terraform commands target, in this case GCP. The last, variables.tf, lists the variables used as inputs to Terraform. Any variable referenced in main.tf that has no default in variables.tf causes Terraform to prompt the user for a value at runtime.
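You may run `terraform` by hand rather than through `make create`. In that case you can avoid the runtime prompts by supplying values in advance. Terraform automatically loads a `terraform.tfvars` file from its working directory, and it also reads `TF_VAR_<name>` environment variables. This is a minimal sketch that writes such a file. The variable names `project` and `zone` are assumptions for illustration, so check variables.tf for the names the demo actually declares. The helper name `write_tfvars` is hypothetical.

```shell
# Write DIR/terraform.tfvars so `terraform plan` / `terraform apply` do not prompt.
# ASSUMPTION: "project" and "zone" are illustrative variable names;
# use the ones declared in variables.tf.
# Alternative without a file: export TF_VAR_project=... TF_VAR_zone=...
write_tfvars() {
  local dir="$1" project="$2" zone="$3"
  printf 'project = "%s"\nzone    = "%s"\n' "$project" "$zone" > "${dir}/terraform.tfvars"
}
```

Usage, run against the directory that holds main.tf: `write_tfvars . "$(gcloud config get-value project)" us-central1-a`.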

$ make create

$ make validate

http://35.225.188.57:8080/

Generating Logs

To get the URL for the application page, perform the following steps:

- In the GCP console, from the Navigation menu, go to the Networking section and click on Network services.

- On the default Load balancing page, click on the TCP load balancer that was set up.

- On the Load balancer details page, find the top section labeled Frontend.

- Copy the IP:Port URL value. Open a new browser tab and paste the URL. The browser should return a screen that looks similar to the following:

The make validate command printed the same link.

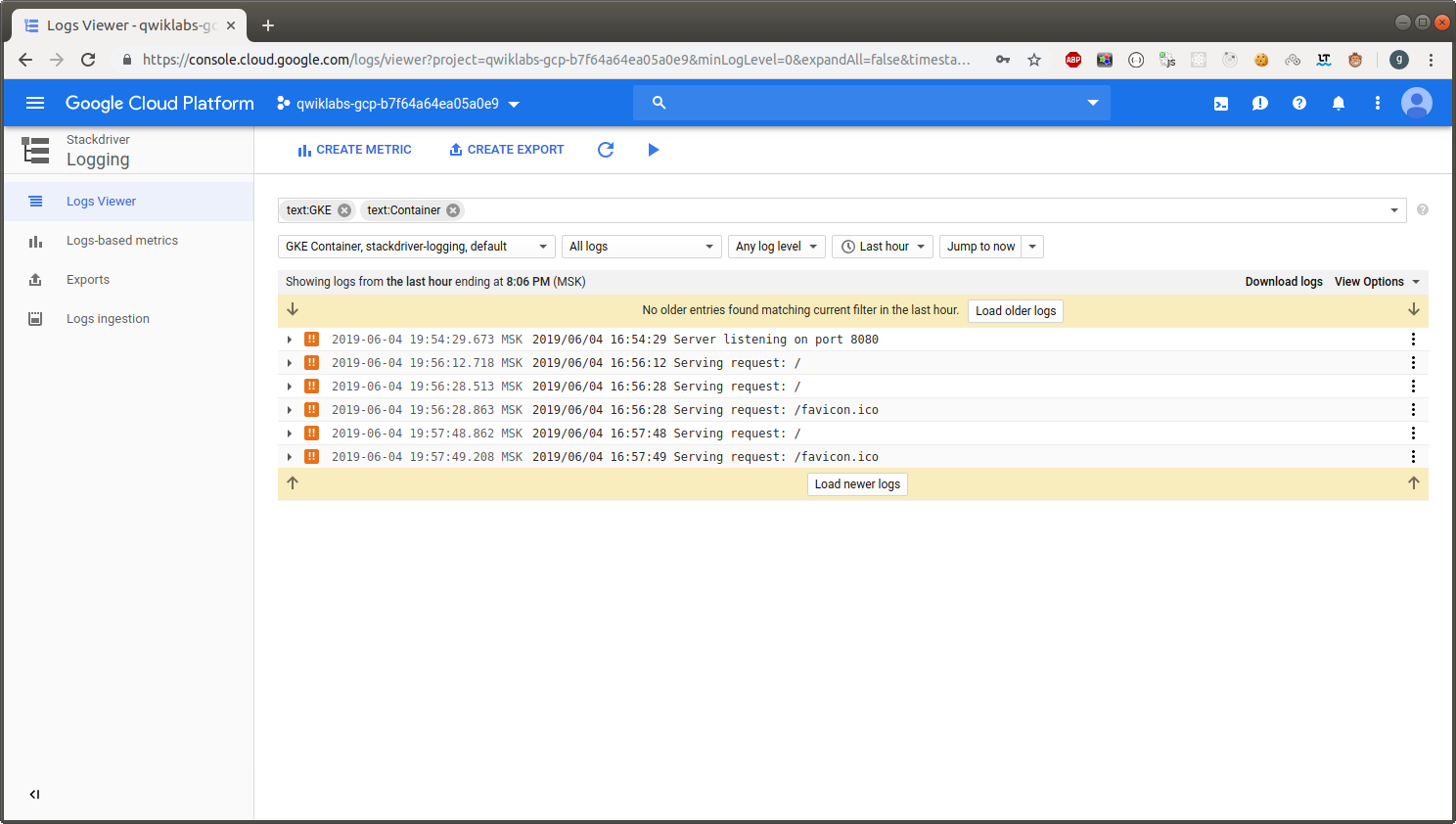
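The console steps above can also be done from Cloud Shell. `gcloud compute forwarding-rules list` reports the load balancer's IP and a port range such as `8080-8080`, and the helper below turns that into the same `http://IP:PORT/` URL that `make validate` prints. The request loop then sends some traffic to the app so that it has something to log. This is a sketch that assumes the lab's TCP load balancer is the first (in a fresh lab project, the only) forwarding rule listed. The helper names `lb_url`, `show_app_url` and `hit_app` are hypothetical.

```shell
# Build "http://IP:PORT/" from an IP and a forwarding-rule port range
# such as "8080-8080" (only the first port of the range is used).
lb_url() {
  local ip="$1" port_range="$2"
  echo "http://${ip}:${port_range%%-*}/"
}

# ASSUMPTION: the first forwarding rule listed is the lab's TCP load balancer.
show_app_url() {
  gcloud compute forwarding-rules list \
    --format="value(IPAddress,portRange)" --limit=1 |
    while read -r ip range; do lb_url "$ip" "$range"; done
}

# Send N requests (default 20) to URL so the app writes some log entries.
hit_app() {
  local url="$1" n="${2:-20}" i=0
  while [ "$i" -lt "$n" ]; do
    curl -s -o /dev/null "$url"
    i=$((i + 1))
  done
}
```

Usage: `hit_app "$(show_app_url)" 50`.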

Logs in Stackdriver

Stackdriver –> Logging

filter –> GKE Container > stackdriver-logging > default

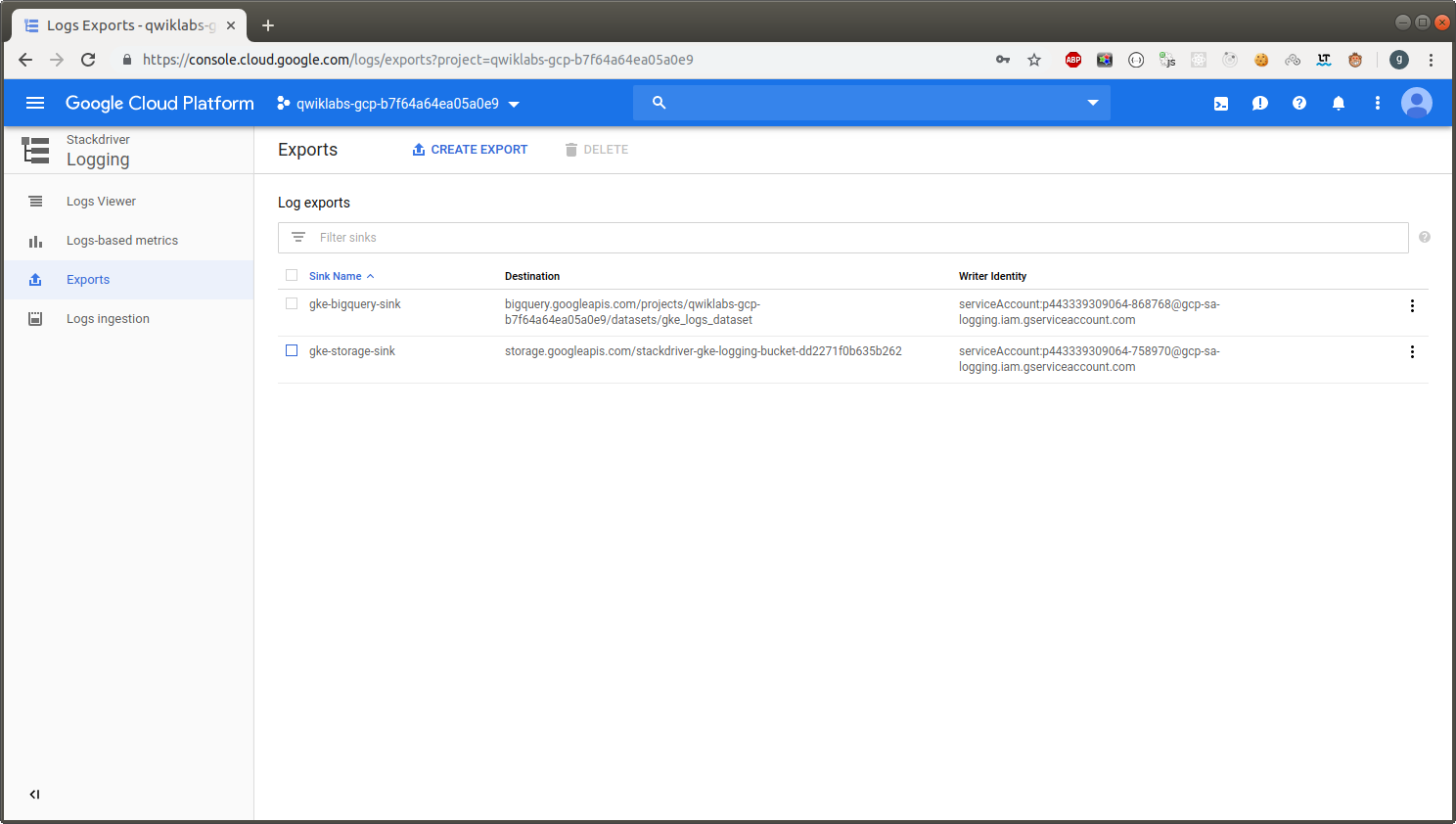
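The same log view is available from the command line with `gcloud logging read`. This sketch makes several assumptions. It uses the legacy Stackdriver resource type `container`, which is what the "GKE Container" filter corresponds to. It takes the cluster name `stackdriver-logging` and the namespace `default` from the filter path above. Clusters on the newer Kubernetes resource model log under `k8s_container` with a `namespace_name` label instead. The helper names are hypothetical.

```shell
# Build a Logging filter for container logs from one cluster and namespace.
# ASSUMPTION: legacy "container" resource type ("GKE Container" in the UI).
gke_container_filter() {
  local cluster="$1" namespace="$2"
  printf 'resource.type="container" AND resource.labels.cluster_name="%s" AND resource.labels.namespace_id="%s"' \
    "$cluster" "$namespace"
}

# Print up to 10 of the newest matching entries from the last hour as JSON.
read_app_logs() {
  gcloud logging read "$(gke_container_filter stackdriver-logging default)" \
    --freshness=1h --limit=10 --format=json
}
```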

Viewing Log Exports

The Terraform configuration built out two Log Export Sinks. To view the sinks perform the following steps:

- You should still be on the Stackdriver -> Logging page.

- In the left navigation menu, click on the Exports menu option.

- This will bring you to the Exports page. You should see two sinks in the list of log exports.

- You can edit/view these sinks by clicking on the context menu (three dots) to the right of a sink and selecting the Edit sink option.

- Additionally, you can create custom export sinks by clicking on the Create Export option at the top of the navigation window.
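You can also inspect the two sinks with `gcloud logging sinks list` and `gcloud logging sinks describe SINK_NAME`. To create a custom sink from the CLI, `gcloud logging sinks create` takes a destination URI. The two helpers below build the Cloud Storage and BigQuery forms of that URI. The helper names and the example sink name in the usage line are hypothetical.

```shell
# Destination URIs in the form `gcloud logging sinks create` expects.
gcs_sink_destination() {
  echo "storage.googleapis.com/$1"
}

bq_sink_destination() {
  local project="$1" dataset="$2"
  echo "bigquery.googleapis.com/projects/${project}/datasets/${dataset}"
}

# List the sinks in the current project (Terraform created two).
show_sinks() {
  gcloud logging sinks list
}
```

Usage: `gcloud logging sinks create my-custom-sink "$(gcs_sink_destination MY_BUCKET)" --log-filter='resource.type="container"'`. After creating a sink, grant its writer identity (printed by the create command) write access to the destination, or no entries will be exported.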

Logs in Cloud Storage

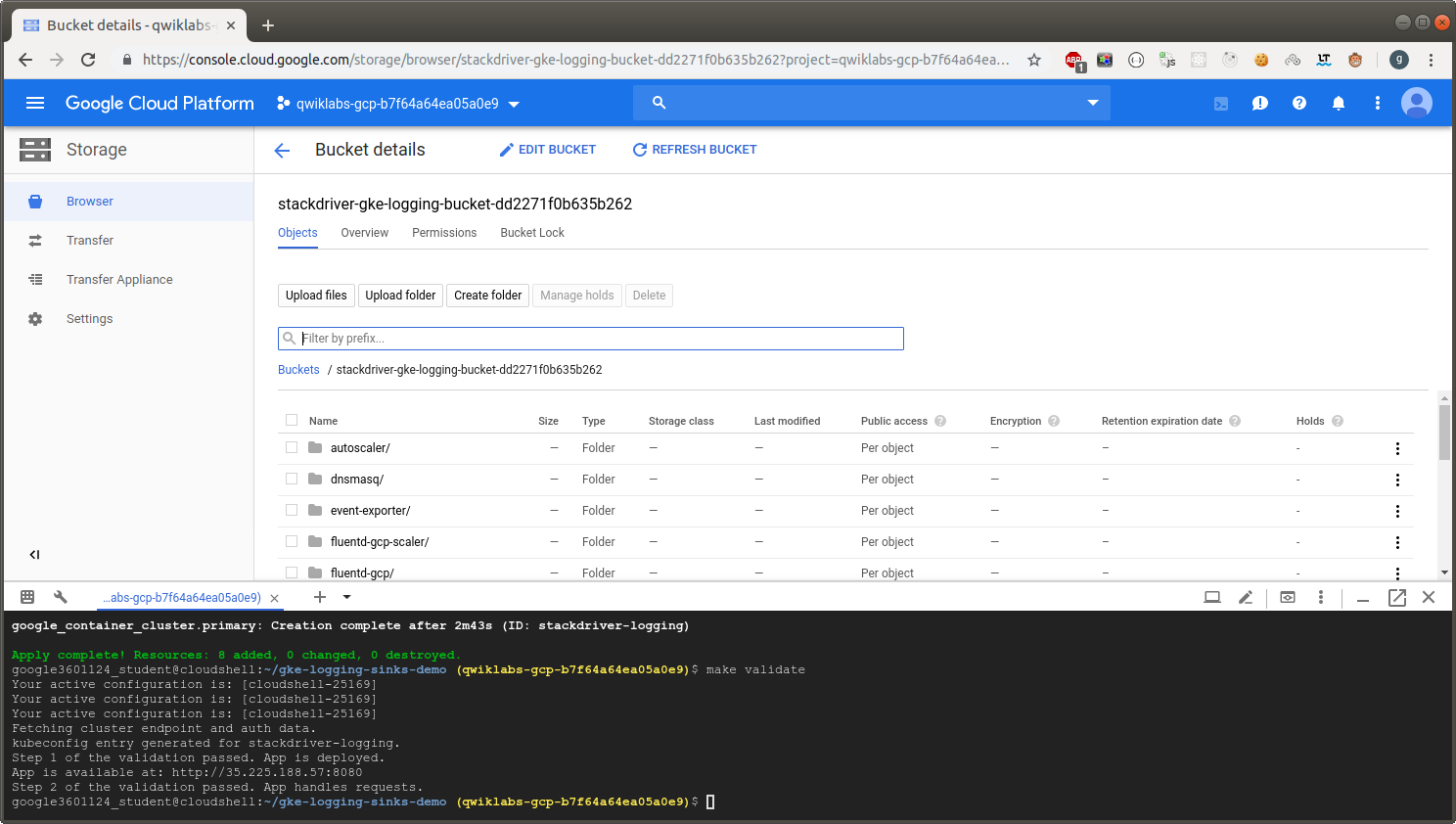

The Terraform configuration created a Cloud Storage bucket whose name begins with stackdriver-gke-logging-. Logs are exported to this bucket for medium- to long-term archival.

Navigation menu –> Storage –> stackdriver-gke-logging-

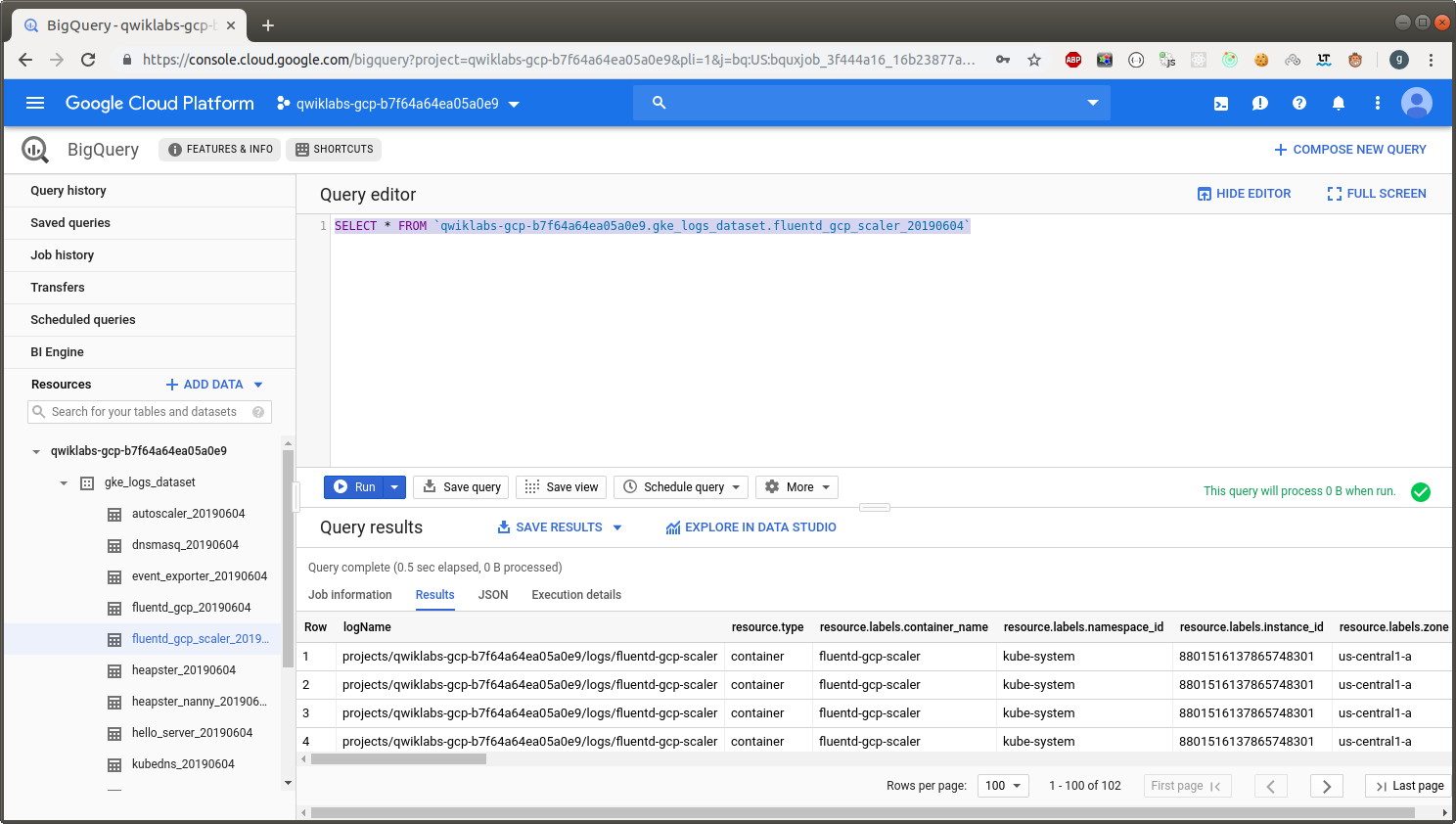
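From Cloud Shell you can find the bucket without knowing its full name by filtering `gsutil ls` on the prefix given above. This is a sketch, and the helper names are hypothetical. Exports to Cloud Storage are written in batches rather than immediately, so the bucket may stay empty for an hour or more after deployment.

```shell
# Read bucket URLs on stdin (one per line, as printed by `gsutil ls`)
# and keep only the one(s) created for the log export.
find_log_bucket() {
  grep '^gs://stackdriver-gke-logging-'
}

# Recursively list everything exported to the log bucket so far.
list_exported_logs() {
  local bucket
  bucket="$(gsutil ls | find_log_bucket | head -n 1)"
  [ -n "$bucket" ] || { echo "no stackdriver-gke-logging- bucket found" >&2; return 1; }
  gsutil ls -r "$bucket"
}
```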

Logs in BigQuery

The Terraform configuration will create a BigQuery dataset named gke_logs_dataset, set up to hold all Kubernetes Engine related logs for the last hour.

Navigation menu –> BigQuery –> gke_logs_dataset

Query Table –>

SELECT * FROM `qwiklabs-gcp-b7f64a64ea05a0e9.gke_logs_dataset.fluentd_gcp_scaler_20190604`
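The export writes one table per log per day, with the date as a `YYYYMMDD` suffix, as in `fluentd_gcp_scaler_20190604` above. You don't need to edit the table name for each day. Standard SQL can query all the daily shards through a wildcard table and pick the day with `_TABLE_SUFFIX`. This is a sketch. The helper name `gke_log_query` is hypothetical, and the `date -u` call in the usage line assumes the shard dates are in UTC.

```shell
# Emit a standard-SQL query over all daily shards of one exported log,
# restricted to a single day (YYYYMMDD) via _TABLE_SUFFIX.
gke_log_query() {
  local project="$1" table_prefix="$2" day="$3"
  printf "SELECT * FROM \`%s.gke_logs_dataset.%s_*\` WHERE _TABLE_SUFFIX = '%s' ORDER BY timestamp DESC LIMIT 20" \
    "$project" "$table_prefix" "$day"
}
```

Usage: `bq ls gke_logs_dataset` lists the available tables, then `bq query --use_legacy_sql=false "$(gke_log_query "$(gcloud config get-value project)" fluentd_gcp_scaler "$(date -u +%Y%m%d)")"`.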