GitOps

Hi!

If you are digging into DevOps / GitOps / SecOps / SysOps / MLOps / AIOps and the like, let's discuss and share knowledge.

Telegram group: https://t.me/techline_ru

Feel free to suggest something interesting to study!

[Book][Natale Vinto] GitOps Cookbook: Kubernetes Automation in Practice [ENG, 2023]

I suggest we work through this book, improve it, and update it. It looks interesting, and there is plenty to run hands-on. Some of the configs exist only in the PDF, and not everything works. Send sensible PRs.

[Book][Joel Lord] Building CI/CD Systems Using Tekton: Develop flexible and powerful CI/CD pipelines using Tekton Pipelines and Triggers [ENG, 2021]

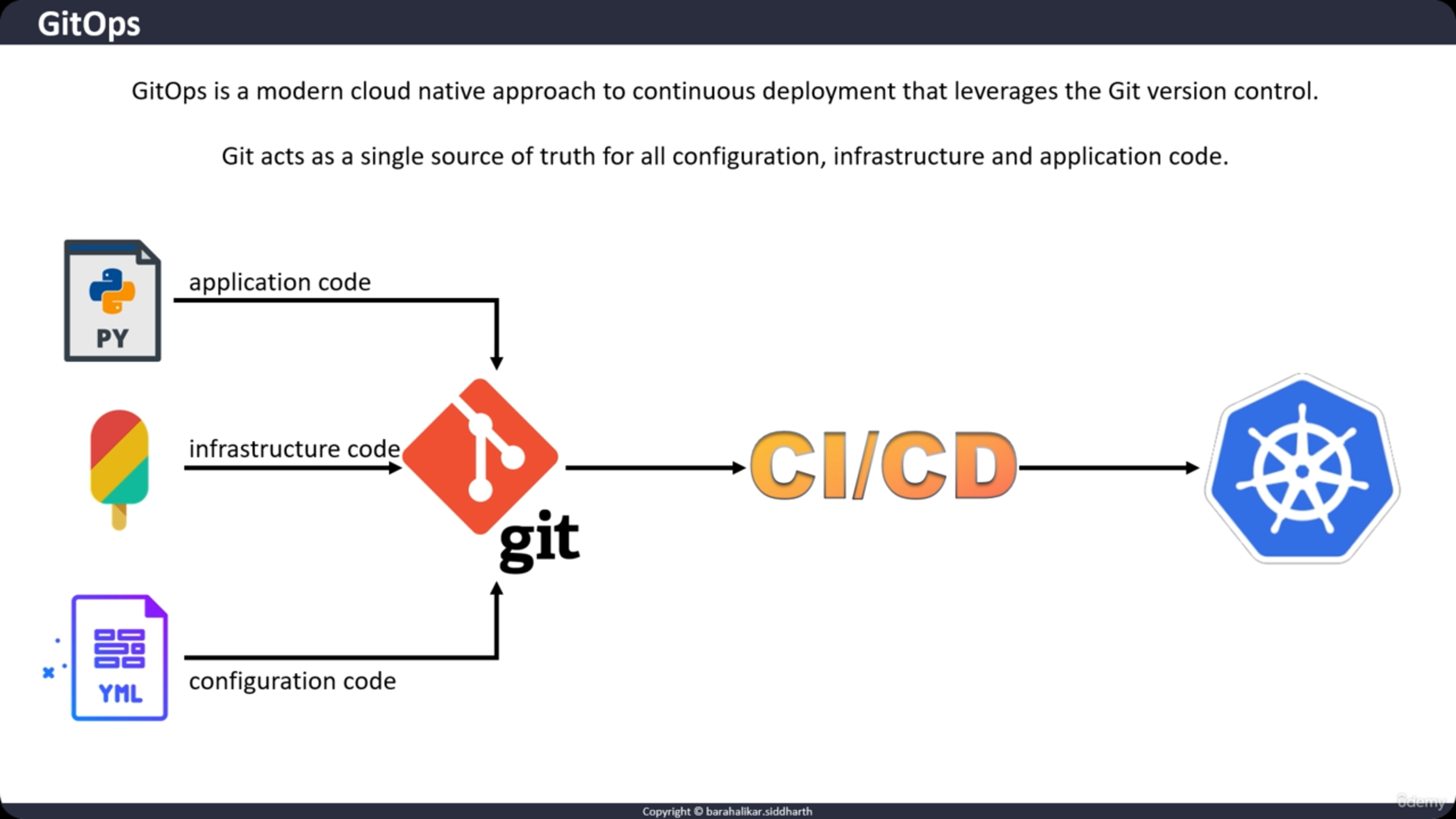

[YouTube] What is GitOps, How GitOps works and Why it’s so useful [ENG]